Redis Streams

As part of making small changes each day, I chose to pick up a small Redis Streams implementation today. The idea was to explore something I had not tried before rather than sharpen the same toolkit.

At the outset, the question was: why Redis Streams, and why not just use PubSub? It came up because of how often I use PubSub, and event-driven architectures in general. A common case would be, say, an order service that talks to a payments service, and then to a procurement service that interfaces with vendors.

Our stack at Plum used Bull, a Redis-backed queue, as a wrapper for such flows, with a consumer or two handling procurement. When I was reworking the stack post Xoxo, I had found PubSub handled this well too, without dropped messages. But Streams offer something PubSub does not: a set-in-stone event log for audits. That is exactly why I have long wanted to get my hands dirty with Streams, but never got around to it.

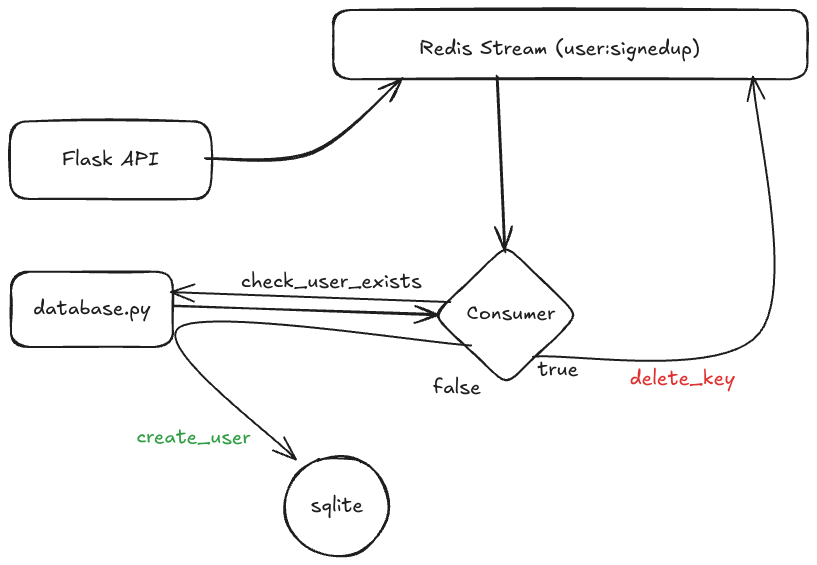

This morning, after a long break from Python, I sat down to write the Streams workflow: a simple signup API that pushes requests to a stream, and a consumer that reads from them.
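To make the two halves concrete, here is a minimal sketch of the producer and a plain consumer. It assumes `r` is a redis-py client and uses a hypothetical stream name, `signups`; the field names are also illustrative, not taken from the repo. The producer appends with `XADD`, and the consumer uses plain `XREAD`, tracking its own position.

```python
import time

STREAM = "signups"  # hypothetical stream name


def publish_signup(r, email: str, name: str):
    """Producer side: append one signup request to the stream.

    `r` is assumed to be a redis-py client. XADD with the default id="*"
    lets Redis assign a unique, monotonically increasing entry ID.
    """
    fields = {"email": email, "name": name, "requested_at": str(time.time())}
    return r.xadd(STREAM, fields)


def read_signups(r, last_id: str = "0-0", count: int = 10, block_ms: int = 1000):
    """Consumer side: plain XREAD of entries newer than last_id.

    Returns (entries, last_id). The caller keeps track of last_id itself;
    there is no consumer group and no acknowledgement at this stage.
    """
    # XREAD returns [[stream_name, [(entry_id, fields), ...]]] under RESP2,
    # or nothing once the block timeout expires without new entries.
    response = r.xread({STREAM: last_id}, count=count, block=block_ms) or []
    entries = []
    for _stream, messages in response:
        for entry_id, fields in messages:
            entries.append((entry_id, fields))
            last_id = entry_id
    return entries, last_id
```

Against a local server, `r = redis.Redis(decode_responses=True)` returns IDs and fields as `str`; without `decode_responses` they come back as `bytes`.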

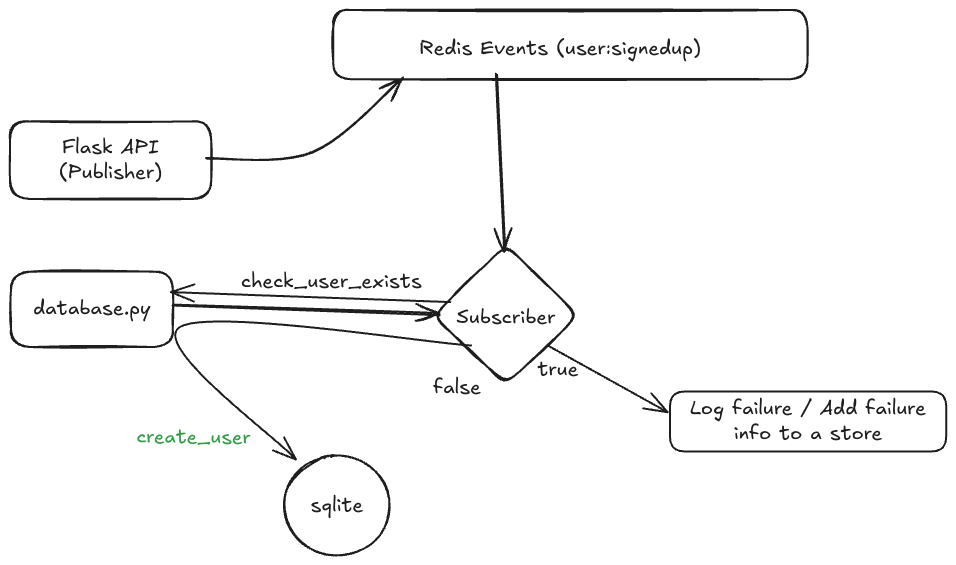

How different is this from the event-driven flow? Not much at the consumption end, as the architecture diagram shows.

Where the two differ sharply is in what we want out of the system.

Want at-most-once delivery? Need real-time, short-lived message passing? PubSub is your best bet.
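To see why Redis Pub/Sub is at-most-once, here is a minimal sketch assuming a redis-py client `r` and a hypothetical channel name. `PUBLISH` reports how many subscribers were connected at that instant; nothing is stored, so an absent subscriber simply misses the message.

```python
import logging

log = logging.getLogger(__name__)
CHANNEL = "signups"  # hypothetical channel name


def announce_signup(r, email):
    """Fire-and-forget publish over Redis Pub/Sub.

    PUBLISH returns how many subscribers were connected at that moment.
    Redis keeps no copy, so a subscriber that is down or reconnecting
    never sees this message: delivery is at most once.
    """
    receivers = r.publish(CHANNEL, email)
    if receivers == 0:
        log.warning("signup for %s reached no subscriber and is lost", email)
    return receivers > 0


def handle_signups(r, handler):
    """Subscriber loop: call handler(data) for every message that arrives."""
    p = r.pubsub(ignore_subscribe_messages=True)
    p.subscribe(CHANNEL)
    for message in p.listen():
        # Only "message" entries carry payloads; subscribe confirmations
        # are already filtered out by ignore_subscribe_messages=True.
        if message["type"] == "message":
            handler(message["data"])
```

There is no acknowledgement and no replay here, which is exactly what keeps Pub/Sub fast and simple for short-lived, real-time messages.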

Want at-least-once delivery? Want retries? Want an audit log of your message consumption? Redis Streams fits cleanly.
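The at-least-once, retry and audit properties all come from consumer groups plus `XACK`. Here is a minimal sketch, again assuming a redis-py client `r`, Redis 5.0+ (when Streams arrived), and hypothetical stream and group names. An entry is only acknowledged after the handler succeeds; anything unacknowledged stays in the group's pending list and is re-read on the next pass.

```python
STREAM = "signups"        # hypothetical stream name
GROUP = "signup-workers"  # hypothetical consumer group name


def ensure_group(r):
    """Create the consumer group (and the stream, via mkstream) if missing."""
    try:
        # id="0": the new group starts from the beginning of the stream.
        r.xgroup_create(STREAM, GROUP, id="0", mkstream=True)
    except Exception as exc:  # redis-py raises redis.exceptions.ResponseError
        # BUSYGROUP means the group already exists, which is fine here.
        if "BUSYGROUP" not in str(exc):
            raise


def _read(r, consumer, start_id, count, block_ms):
    """Flatten an XREADGROUP reply into a list of (entry_id, fields)."""
    reply = r.xreadgroup(GROUP, consumer, {STREAM: start_id},
                         count=count, block=block_ms) or []
    return [(entry_id, fields) for _stream, entries in reply for entry_id, fields in entries]


def consume(r, consumer, handler, count=10, block_ms=1000):
    """Process one batch for `consumer` and return how many entries were acked."""
    # Retry path: ID "0" returns entries already delivered to this consumer
    # but never XACKed (e.g. the handler failed or the process crashed).
    batch = _read(r, consumer, "0", count, None)
    if not batch:
        # Normal path: ID ">" asks for entries never delivered to this group.
        batch = _read(r, consumer, ">", count, block_ms)
    acked = 0
    for entry_id, fields in batch:
        try:
            handler(fields)
        except Exception:
            # No XACK: the entry stays pending and comes back on the next
            # call via the "0" read above. This is what makes it at-least-once.
            continue
        r.xack(STREAM, GROUP, entry_id)
        acked += 1
    return acked


def audit_log(r, count=100):
    """XACK does not delete entries, so the stream doubles as a replayable history."""
    return r.xrange(STREAM, min="-", max="+", count=count)
```

Two caveats on this design: a message that always fails is retried forever (`XPENDING` reports a per-entry delivery count a real worker could use to cap retries), and entries left pending by a consumer that never comes back need `XCLAIM` or `XAUTOCLAIM` from another consumer.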

If you have seen Kafka in use and would like to try something similar, Redis Streams again comes in handy.
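The closest Kafka analogy is consumer groups: every group receives the whole stream, while consumers inside one group split the entries between them. A minimal sketch, assuming a redis-py client `r` and hypothetical group names for two downstream concerns:

```python
STREAM = "signups"                       # hypothetical stream name
GROUPS = ("welcome-email", "analytics")  # hypothetical consumer groups


def setup_groups(r):
    """One consumer group per downstream concern, like Kafka consumer groups on a topic."""
    for group in GROUPS:
        try:
            r.xgroup_create(STREAM, group, id="0", mkstream=True)
        except Exception as exc:  # redis-py raises redis.exceptions.ResponseError
            # BUSYGROUP means the group already exists, which is fine here.
            if "BUSYGROUP" not in str(exc):
                raise


def read_new(r, group, consumer, count=10):
    """Return IDs of entries never delivered to `group`, now assigned to `consumer`."""
    reply = r.xreadgroup(group, consumer, {STREAM: ">"}, count=count) or []
    return [entry_id for _stream, entries in reply for entry_id, _fields in entries]
```

Adding a second worker to `welcome-email` shares that group's load without affecting what `analytics` sees. Unlike Kafka there are no partitions here; a single stream lives on a single Redis key.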

Wrote a simple API and Streams integration to handle user signups. This repo contains the basic scaffold of the implementation to get you started.